Your developers are working at breakneck speed to build AI-powered solutions, right?

Your customers crave the increased efficiency and productivity they deliver, right?

In the unified communications (UC) space, this is surely a formula for providers’ long-term prosperity. After all, AI is only likely to get smarter. However, there’s a ‘but’. Regulation is coming, and for UC vendors, that means a whole new set of rules is likely to reshape the art of the possible soon.

In principle, most agree that it is in everyone’s interests for international standards to apply to AI innovation. But understanding, interpreting, and adhering to what is certain to be a complex patchwork of regulations that vary from one jurisdiction to the next will be a tough challenge for UC providers.

The opportunity IS huge, and the direction of travel IS only one way. It just means that partnering with governance experts who are already ahead of the curve is vital.

“A critical intersection of UC, AI, and data protection is imminent. It’s super-important that vendors and providers are able to take into consideration all the ethical concerns, but also be able to comply with the laws that already exist, as well as the many that soon will,” says Dr Scott Allendevaux, senior practice lead at Allendevaux & Company, a UC professional services firm whose AI management capabilities are designed to keep solution and platform innovators compliant.

“Data protection and business continuity compliance have long been essential for any UC provider, and now AI compliance is part of that piece.”

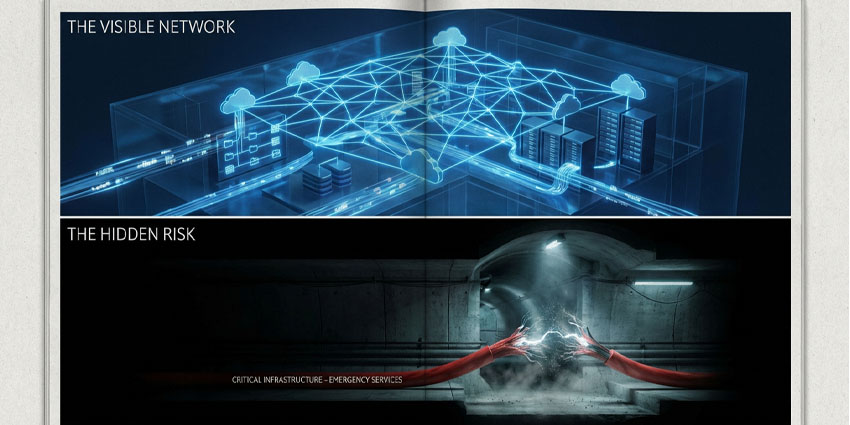

Indeed, in what Allendevaux describes as the “AI gold rush”, the legal frontier is evolving rapidly around the world. Different jurisdictions are adopting their own statutes and, helpfully, the internationally recognised ISO 42001 standard has been established as a blueprint for responsible AI deployment. Importantly, it addresses risk management in the design and development of AI systems, including AI-powered UC tools, and the potential for regulatory breaches to occur unintentionally.

“Providers and vendors want to innovate and push the limits in order to be competitive, but understanding the risks is vital,” says Allendevaux.

“ISO 42001 provides a process for systematically mapping how data moves through an AI-powered communication ecosystem that might comprise multiple integrations and APIs. It covers the risk of unintended data breaches relating to transparency, automation, and explainability, and risk levels are expressed as metrics. The results can be quantified and given back to the software developers to help them design better products. Because innovation is constant, an AI Management Plan should be a living, breathing thing, so regular compliance testing is essential. AI compliance management should be a continuous improvement life cycle, not something that is done once or treated as a checkbox exercise. It should be a way of working and a way of thinking, built into your overall development and design processes.”

Allendevaux believes AI-related data breaches will become more common and will prompt regulatory authorities to take stringent measures in the near term as the technology continues to encroach on fundamental privacy rights. As data collection grows exponentially through various channels, including the Internet of Things, the threats only loom larger.

“Data protection compliance is a legal requirement but, more importantly, it is an ethical imperative,” says Allendevaux. “There is one sure-fire way to doom the reputation of your company, and that is to play fast and loose with personal data. If left unchecked, AI could be the largest threat to personal privacy ever witnessed. Assuring data privacy should not be a cat-and-mouse game of developers trying to stay one step ahead of regulators.

“It should be a partnership based on trust between developer and user, with regulatory authorities behind the scenes setting the standards and expert professional services firms providing the crucial real-world, day-to-day compliance management.”

To learn more about how Allendevaux & Company can help your business, and your customers’ businesses, manage the risk of AI-powered innovation, visit the website.