Every leader wants to know their collaboration tools are “working”. We all want evidence that platforms are boosting productivity, efficiency, and creativity. Otherwise, all the money companies spend on copilots, meeting tools, and chat apps starts to feel pointless.

This is where it goes sideways. Managers start watching people instead of work, and platforms like Microsoft Teams make that possible, right down to tracking location. The moment a team senses that shift, the tone changes. People do what they think looks right, not what actually helps.

They focus on filling calendars, keeping status lights green, and hiding anything that might not follow standard policy. Collaboration analytics start to lie at that point, and teams start to burn out.

Back in 2023, ExpressVPN found that 78% of remote workers feel stress or anxiety knowing they’re being monitored. One in three said they’d take a pay cut to avoid workplace surveillance.

Here’s the irony: the more organizations obsess over collaboration metrics, the less truthful those signals become. That’s why measuring without surveillance is so important.

What Is Collaboration Analytics?

Collaboration analytics is all about using data drawn from collaborative systems to understand how teams actually work together. Some companies think it’s about adding up the number of meetings that happen or messages that get sent. It’s not. You’re trying to figure out where teamwork goes off the rails.

Look at a typical project. A decision gets discussed in a meeting. Someone writes a recap. A few days later, the same question appears again in chat because two teams walked away with different interpretations. Work pauses while people clarify what was meant. None of that shows up clearly in activity dashboards, even though it’s where most coordination problems live.

Collaboration analytics focuses on those patterns. How long does it take for discussions to turn into decisions? How often do topics get revisited after they were supposedly settled? Where does work slow down because a handoff between teams wasn’t clear?

The signals come from the same tools everyone already uses. Meeting transcripts, message threads, task updates, and project timelines all leave traces of how coordination unfolded. When those traces are examined together, they show where communication helped the work move forward and where it just created confusion.

Why Measuring Collaboration Is So Hard

Measuring collaboration, like tracking productivity, isn’t easy because work is messy. It’s not a linear process. It’s a blur of half-formed ideas, handoffs, revisions, and decisions made in a meeting that change in chat three hours later.

That messiness is exactly why collaboration analytics so often drift toward the wrong signals. Activity is visible, but behavior isn’t. Platforms surface what’s easy to count, not what’s meaningful to understand.

Hybrid work made this worse. Microsoft’s 2025 Work Trend Index found knowledge workers are interrupted roughly every two minutes during core hours. The most “connected” employees face hundreds of pings a day. When leaders see that volume, it looks like engagement. It’s really just the path to burnout.

AI distorts things even more. Meeting summaries, transcripts, and searchable conversations are all useful, but also incomplete. Once collaboration turns into a permanent record, people adjust what they say. Not because they’re hiding something, but because nobody wants a half-baked thought frozen in time. That tension shapes behavior.

This is why so many collaboration metrics feel unsatisfying. They capture noise, not progress. They tell you where people were, not whether decisions landed or work moved forward.

Unfortunately, when work feels fragmented and exhausting, organizations often respond by tracking harder instead of asking why coordination is failing in the first place.

Why Do Employees Worry About Surveillance in Collaboration Analytics?

Employees worry about surveillance in collaboration analytics because measurement can turn into monitoring far too quickly. It’s not just that staff are concerned about companies capturing too much “private data”. They’re worried about what that data will be taken to mean.

Will participating in fewer meetings stop them from getting promotion opportunities? Will fewer messages sent in chat show up on their performance review? Could lower use of a specific AI tool lead to micromanagement from a supervisor?

When teams feel like they’re “under surveillance”, they start “acting”. They show leaders what they assume leaders want to see, to protect themselves from backlash. Slack once found that 63% of workers make an effort to keep their status active even when they’re not working.

Psychological safety starts to suffer, too. People share fewer opinions and disagree less because they don’t want to be tagged as the person who “causes problems”. That’s particularly true in the age of AI. When people know the “record” of collaboration might outlive its context, they start filtering themselves. People speak differently when they feel assessed.

None of this means leaders should stop paying attention to their workers, or that they should stop investing in collaboration analytics. They still need the right data, not just for compliance and security reasons, but for guidance on how to improve the employee experience overall.

The trick is finding the right balance, knowing how to “check in” without spying.

How Can Companies Measure Collaboration ROI Effectively?

Measuring collaboration ROI effectively today means changing how you look at the metrics. It’s not just about adding up quantitative metrics anymore. It’s about taking a broader look at collaboration in general, without stepping into surveillance.

Leaders need to stop watching people and start studying how work behaves. Collaboration analytics should tell you where coordination helps or hurts, where decisions slow down, and where handoffs get messy.

Think about what actually derails teams. It’s rarely effort. It’s friction. A decision that keeps getting revisited. A dependency that no one owns. A meeting that produces notes but no next step. These patterns repeat across teams, which is exactly why they’re measurable without pointing a finger.

This is where most collaboration metrics struggle: they sit too close to the individual. System-level signals sit farther back and show flow, blockage, and rework. Because they’re aggregated, people don’t feel watched, so they stay honest.

You don’t fix collaboration by grading people. You fix it by redesigning the environment they’re working in.

Activity Metrics vs Behavioural Signals: What to Watch

If you want to understand why collaboration analytics so often disappoint, look at what they’re built to notice.

Activity metrics are tempting because they’re loud. Messages sent. Meetings attended. Time spent “active.” They create the illusion of control. They also flatten reality. A packed calendar might signal urgency or confusion. A fast reply might mean clarity, or fear of being seen as disengaged. These signals tell you someone is busy. They don’t tell you whether work is actually moving.

Behavioral signals show up in patterns, not counts. How often does a decision come back around after it was “final”? How long does it take for work to move from discussion to execution? Where do projects stall because one team is waiting on another to interpret the same information differently?

That’s the difference between shallow collaboration metrics and useful ones. One describes motion. The other explains friction.
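To make “motion versus friction” concrete, here is a minimal Python sketch that derives both kinds of signal from the same stream of chat events. The `ThreadEvent` record, its `"message"` and `"decision"` event types, and the `activity_vs_friction` function are hypothetical. They don’t reflect any platform’s export format, and a real system would also need some way to tag topics and decisions, which is the hard part. The message count is the activity metric. The number of topics that drew new messages after being marked decided is a rough behavioral signal.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class ThreadEvent:
    """Hypothetical chat event; events are assumed to arrive in chronological order."""
    team: str
    topic: str
    kind: str  # "message" or "decision" (illustrative event types)


def activity_vs_friction(events: list[ThreadEvent]) -> dict[str, dict[str, int]]:
    """Compare an activity count with a behavioral signal for each team."""
    messages: Counter[str] = Counter()
    decided: dict[str, set[str]] = defaultdict(set)     # topics marked decided, per team
    resurfaced: dict[str, set[str]] = defaultdict(set)  # decided topics discussed again later
    for e in events:
        if e.kind == "decision":
            decided[e.team].add(e.topic)
        elif e.kind == "message":
            messages[e.team] += 1  # activity: raw volume
            if e.topic in decided[e.team]:
                resurfaced[e.team].add(e.topic)  # behavior: a "final" decision came back
    teams = set(messages) | set(decided)
    return {
        team: {"messages": messages[team], "resurfaced_topics": len(resurfaced[team])}
        for team in sorted(teams)
    }


if __name__ == "__main__":
    events = [ThreadEvent("a", "launch", "message")] * 6
    events.append(ThreadEvent("a", "launch", "decision"))
    events += [
        ThreadEvent("b", "pricing", "message"),
        ThreadEvent("b", "pricing", "decision"),
        ThreadEvent("b", "pricing", "message"),
    ]
    print(activity_vs_friction(events))
```

In the sample, team `a` looks three times busier by message volume. Only team `b` has a settled topic that came back around, and that is the pattern worth a closer look.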

You can see why this matters in hybrid teams. Exhaustion often comes from constant context switching, not a lack of effort. When analytics reward visibility, they amplify that problem. When analytics surface system friction, leaders can actually fix something.

This distinction also protects trust. Behavioral signals don’t single people out. They describe how the system behaves under pressure. Teams don’t feel graded, so they don’t game the data.

How Can Leaders Balance Visibility and Employee Privacy?

Once leaders accept that collaboration analytics should focus on systems, not individuals, the next question is obvious: how do you measure without people feeling watched? Plenty of tools already exist to help. Workplace management tools track engagement, collaboration apps like Teams capture insights into activity, and UC service management tools monitor licence usage.

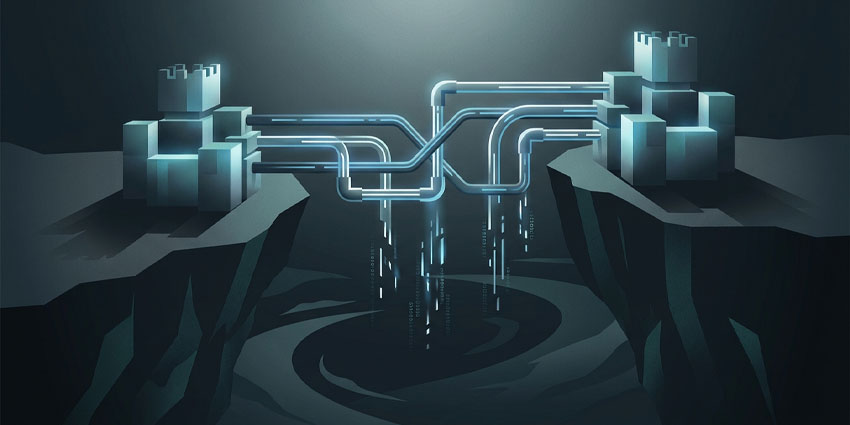

Even human capital management tools can share valuable insights into which employees are thriving and which are burning out. What matters is how companies turn that data into something they can use. Doing it without the surveillance vibe usually starts with three things:

- Aggregation: Insight should live at the team or workflow level, never the individual one. Patterns matter; outliers don’t. When leaders look at repeated friction across groups, like handoffs that stall and decisions that loop, they get something actionable without creating a blame target. That’s the difference between useful collaboration metrics and data people learn to fear.

- Anonymization: Remove names. Remove identifiers. Strip the temptation to zoom in. Microsoft has been explicit about this in its approach to organizational insights, using de-identified and privacy-protected views so leaders can see trends without tracking people. That design choice is the reason the data stays believable.

- Purpose limitation: This one gets ignored most often, and it’s where suspicion creeps in. If employees don’t know why something is being measured, or worse, suspect it might later be used for evaluation, they’ll change their behavior immediately. Transparency matters more than sophistication here. Say what insight is for. Say what it isn’t for. Then stick to it.
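Here is a minimal Python sketch of how the three principles could be enforced in code rather than left to policy alone. It assumes a hypothetical `HandoffEvent` record, a minimum group size of 5, and a single declared purpose, `"workflow_improvement"`. These are illustrative choices, not a vendor default or Microsoft’s implementation. Dropping person IDs from the output is also only a first step, not full de-identification.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class HandoffEvent:
    """Hypothetical record of one cross-team handoff (not a real platform schema)."""
    person_id: str     # used only to count distinct contributors; never reported
    team: str
    wait_hours: float  # how long the handoff sat before someone picked it up


# Assumed policy values: choose and publish your own.
MIN_GROUP_SIZE = 5
DECLARED_PURPOSES = {"workflow_improvement"}


def team_handoff_report(
    events: list[HandoffEvent],
    purpose: str,
    min_group_size: int = MIN_GROUP_SIZE,
) -> dict[str, float]:
    """Mean handoff wait per team, with small teams suppressed and no person IDs in the output."""
    # Purpose limitation: refuse any use that wasn't declared to employees up front.
    if purpose not in DECLARED_PURPOSES:
        raise PermissionError(f"undeclared purpose: {purpose!r}")

    waits: dict[str, list[float]] = defaultdict(list)
    people: dict[str, set[str]] = defaultdict(set)
    for event in events:
        waits[event.team].append(event.wait_hours)
        people[event.team].add(event.person_id)

    report: dict[str, float] = {}
    for team, values in waits.items():
        # Aggregation: a team with too few distinct people could expose individuals, so skip it.
        if len(people[team]) < min_group_size:
            continue
        # Anonymization: results are keyed by team only.
        report[team] = round(sum(values) / len(values), 2)
    return report


if __name__ == "__main__":
    sample = [HandoffEvent(f"p{i}", "platform", float(i)) for i in range(5)]
    sample += [HandoffEvent("d1", "design", 10.0), HandoffEvent("d2", "design", 20.0)]
    print(team_handoff_report(sample, "workflow_improvement"))
```

Here `design` has only two contributors, so it is left out of the report entirely. A request made for an undeclared purpose such as `"performance_review"` raises an error instead of returning data.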

That’s when measuring collaboration actually works. Not because you collected more data, but because you stopped poisoning the signal.

What Metrics Actually Show Collaboration Effectiveness?

This is usually where people ask for a list of KPIs, something they can screenshot and circulate. That instinct is understandable, and it’s exactly what breaks collaboration measurement all over again.

The better move is to change the questions. Look at:

- Decision latency: Not how many meetings happened, but how long it took for a real decision to stick. When the same topic keeps resurfacing, that’s not a healthy debate. It’s a signal that context, ownership, or clarity is missing. Microsoft’s research on the “infinite workday” shows how constant interruptions crowd out actual decision-making work. Meetings multiply. Progress doesn’t.

- Rework signals: Where does work quietly loop back because people didn’t leave the room or thread with the same understanding? Rework is a coordination issue. Zoom and Webex both acknowledge that AI summaries struggle with overlapping voices and domain language. That’s a useful reminder: activity artefacts don’t equal alignment. Behaviour does.

- Cross-team dependency friction: Where do handoffs stall? Where do teams wait on interpretations instead of inputs? Fragmentation and tool sprawl turn simple dependencies into slow leaks of energy. When collaboration analytics surface those patterns, leaders can redesign the system instead of chasing individuals.
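Here is a minimal Python sketch of how decision latency and a simple rework signal could become team-level numbers. It assumes a hypothetical decision log: `Decision` records with `raised`, `settled`, and `reopen_count` fields that some process already maintains, for example from meeting notes or a project tracker. Nothing here reads from Teams, Zoom, or Webex. The sketch only shows the shape of the calculation.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median


@dataclass(frozen=True)
class Decision:
    """Hypothetical decision-log entry; the fields are illustrative assumptions."""
    team: str
    raised: datetime    # when the topic first came up
    settled: datetime   # when the decision that finally stuck was recorded
    reopen_count: int   # times the topic resurfaced after being marked decided


def decision_signals(decisions: list[Decision]) -> dict[str, dict[str, float]]:
    """Per-team median decision latency (days) and share of decisions that were reopened."""
    by_team: dict[str, list[Decision]] = {}
    for d in decisions:
        by_team.setdefault(d.team, []).append(d)

    signals: dict[str, dict[str, float]] = {}
    for team, team_decisions in by_team.items():
        # Decision latency: time from first discussion to the decision that stuck.
        latencies = [
            (d.settled - d.raised).total_seconds() / 86_400 for d in team_decisions
        ]
        # Rework signal: fraction of decisions that came back around at least once.
        reopened = sum(1 for d in team_decisions if d.reopen_count > 0)
        signals[team] = {
            "median_latency_days": median(latencies),
            "reopen_rate": reopened / len(team_decisions),
        }
    return signals


if __name__ == "__main__":
    log = [
        Decision("ops", datetime(2025, 1, 1), datetime(2025, 1, 3), reopen_count=0),
        Decision("ops", datetime(2025, 1, 1), datetime(2025, 1, 5), reopen_count=2),
    ]
    print(decision_signals(log))
```

The sketch uses the median rather than the mean, so a single long-running decision doesn’t dominate a team’s number. That is a design choice, not a standard. Because results are grouped by team, the output describes how a workflow behaves rather than how any one person performs.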

None of this requires invasive collaboration analytics. Restraint works a lot better. When leaders focus on flow instead of visibility, collaboration metrics stop feeling like surveillance and start acting like a diagnostic tool.

Ethical Measurement Is Now a Leadership Priority

One thing to keep in mind is how this changes leadership behavior.

Every measurement choice sends a signal. When leaders track presence, they reward visibility. When they track speed, they reward interruption. When they track volume, they reward noise. None of that happens accidentally. It’s design, whether anyone admits it or not.

That’s why collaboration analytics now sit squarely in the leadership lane, especially as AI gets folded deeper into everyday collaboration. Meeting summaries become “what happened.” Transcripts become memory. Search becomes authority. Whoever decides how those artefacts are used is shaping how people speak long before the meeting even starts.

There are real-world examples of this playing out. We’ve spoken before about how teams rolling out Microsoft Teams at scale saw dramatically higher adoption when executives modelled healthy collaboration behaviours themselves, instead of leaning on enforcement or monitoring. In one case, Concentrix reported a 48× increase in organic Teams adoption after senior leaders changed how they worked, not how they measured.

Ethical measurement isn’t about adding guardrails after the fact. It’s about choosing what not to observe. Choosing aggregation over attribution, patterns over profiles, and improvement over judgement.

This is also where collaboration ROI either compounds or collapses. Trust accelerates coordination. Fear slows everything down.

Collaboration Analytics: Insight Without Destroying Trust

Collaboration analytics aren’t problematic because of a lack of data. We just keep asking the wrong questions. We stare at activity because it’s comforting. It looks objective, it feels managerial, and it consistently tells us less than we think.

Over time, surveillance just diminishes trust and honesty. People comply with the rules they think they’re meant to follow and hide the rest, so the data flows, but the truth doesn’t.

The alternative isn’t softness or blind faith. It’s discipline. Measuring systems instead of people. Looking for friction instead of fault. Treating collaboration like what it actually is: a fragile, human process that breaks the moment it feels judged.

This matters because unified communications platforms now sit at the centre of how work happens. You can see this in our guide to what unified communications really means today.

If you care about measuring collaboration in a way that improves outcomes, the line is clear. Aggregate. Anonymize. Be explicit about purpose. Then resist the urge to peek behind the curtain.

You don’t get better collaboration by watching people harder. You get it by understanding how work actually moves, and fixing what gets in the way.

FAQ

How can organizations measure collaboration without monitoring individuals?

Shift the view up a level. Look at how work moves between teams rather than what one person did. Where do projects stall? How long does it take for a decision to stick? Patterns like that show coordination problems without turning dashboards into employee scorecards.

What data should companies avoid collecting in collaboration analytics?

Anything that feels like presence tracking. Location data, status-light monitoring, or minute-by-minute activity logs rarely explain why work slows down. They mostly teach employees to look busy. Once that happens, the data stops reflecting what’s actually going on.

How can collaboration analytics improve team productivity?

It shows where coordination breaks down. A decision that keeps resurfacing. A project stuck waiting on another team. A meeting that produces notes but no next step. When leaders see those patterns, they can fix the workflow instead of pushing people to simply work faster.

What tools help measure collaboration performance ethically?

Most companies already have them. Teams, Slack, and other collaboration platforms produce aggregated usage patterns. UC service management tools and workplace analytics platforms add another layer by showing how communication flows between groups rather than zooming in on individuals.

What are the risks of using surveillance-style collaboration analytics?

People start managing their appearance instead of their work. Calendars fill up. Status lights stay green. Conversations become careful because nobody wants their words misread later. The numbers may look healthy, but the signal behind them becomes far less reliable.