Anthropic is pushing Claude toward a more integrated way of working. With a new extension to the Model Context Protocol (MCP), called MCP Apps, Claude can open tools like Slack, Asana, Figma and Canva as interactive experiences inside the chat window. Instead of getting a text response and switching tabs, users can preview, refine and adjust work in place.

It’s a solid usability upgrade. It also reflects a broader shift in how AI is being productised: chat is becoming the command surface, and applications are becoming embedded workspaces.

But for enterprise IT and collaboration leaders, this announcement is only part of the story. The industry is already past the question of whether an assistant can connect to tools. The harder question is whether enterprise AI agents can be trusted to act, and answering it comes down to identity, permissions, governance and accountability.

MCP Apps improve the user experience, not the risk model

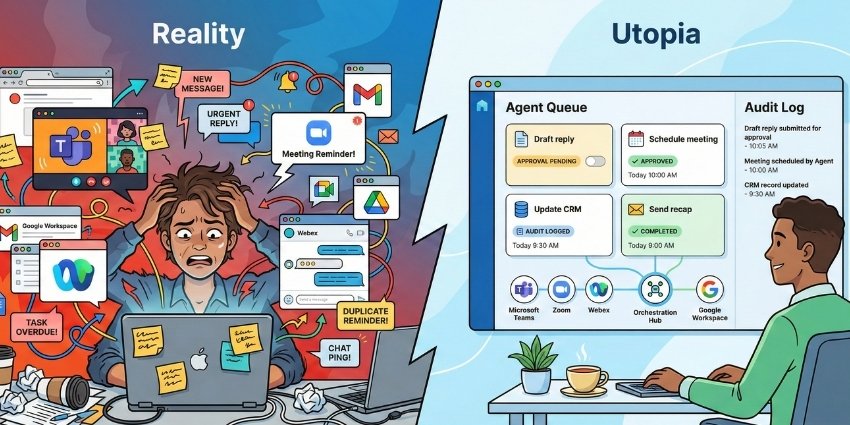

The in-chat app experience addresses a common weakness of earlier AI integrations. When assistants only return text, users have to copy and paste the output into the target application, then fix formatting, validate outputs, and deal with the gap between what the assistant suggested and what the app can actually accept.

Embedded, interactive apps reduce that friction. They also encourage review. A user can see a Slack message before it posts, or adjust a Canva deck before it’s exported and shared. In practical terms, that can cut rework and reduce simple mistakes.

This is why “apps inside chat” is gaining momentum across the market. People do not want a separate assistant sitting off to the side. They want work to move faster in the systems they already use.

Enterprise AI agents are now an identity and permissions challenge

Tool access is quickly becoming table stakes. The enterprise challenge is delegated authority.

Drafting a Slack message is low stakes. Posting into the wrong channel is not. Creating new spaces, inviting external guests, pulling customer data into a conversation, or triggering actions across connected systems can all carry compliance and security implications.

As soon as an AI agent can do more than draft, enterprises start asking different questions. Which identity is the agent using when it takes an action? Is it acting as the employee, as a bot identity, or as a service account? What permissions does it inherit, and can those permissions be scoped to a task and time-limited? Can admins restrict the agent to “draft only” modes, or require explicit approval before publishing?
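To make those questions concrete, here is a minimal sketch of how a delegation policy might encode them: which identity the agent acts as, which actions and channels the grant covers, when it expires, whether it is pinned to drafting, and which actions need a human sign-off. Everything here is hypothetical: the `ActingIdentity` enum, the `DelegationGrant` fields, action names such as `"slack.post"` and the `authorise` function are illustrative assumptions, not part of MCP, Claude or any vendor’s API.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from enum import Enum


class ActingIdentity(Enum):
    """Whose authority the agent uses when it acts (hypothetical model)."""
    EMPLOYEE = "employee"        # acting on behalf of a signed-in user
    BOT = "bot"                  # a dedicated bot identity
    SERVICE_ACCOUNT = "service"  # a shared service account


@dataclass(frozen=True)
class DelegationGrant:
    """A task-scoped, time-limited grant of authority (hypothetical fields)."""
    identity: ActingIdentity
    principal: str                # who the agent ultimately acts for
    allowed_actions: frozenset    # e.g. {"slack.draft", "slack.post"}
    allowed_channels: frozenset   # resources the grant is scoped to
    expires_at: datetime          # grants lapse rather than persist
    draft_only: bool = True       # admins can pin the agent to drafting
    approval_required: frozenset = frozenset()  # actions needing sign-off


def authorise(grant, action, channel, now=None, approved_by=None):
    """Deny by default; return (allowed, reason) so every decision can be logged."""
    now = now or datetime.now(timezone.utc)
    if now >= grant.expires_at:
        return False, "grant expired"
    if action not in grant.allowed_actions:
        return False, f"action {action!r} not in grant"
    if channel not in grant.allowed_channels:
        return False, f"channel {channel!r} outside grant scope"
    if grant.draft_only and not action.endswith(".draft"):
        return False, "grant is draft-only"
    if action in grant.approval_required and approved_by is None:
        return False, "explicit approval required"
    return True, "ok"


if __name__ == "__main__":
    now = datetime.now(timezone.utc)
    grant = DelegationGrant(
        identity=ActingIdentity.EMPLOYEE,
        principal="alice@example.com",  # hypothetical user
        allowed_actions=frozenset({"slack.draft", "slack.post"}),
        allowed_channels=frozenset({"#project-x"}),
        expires_at=now + timedelta(hours=1),
        draft_only=False,
        approval_required=frozenset({"slack.post"}),
    )
    print(authorise(grant, "slack.draft", "#project-x", now=now))  # (True, 'ok')
    print(authorise(grant, "slack.post", "#project-x", now=now))   # (False, 'explicit approval required')
```

The design choice worth noting is that each check returns a reason as well as a yes or no, so a denied action (wrong channel, expired grant, missing approval) can be recorded rather than silently dropped.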

MCP may standardise how tools and data are reached, but it doesn’t automatically solve identity and governance. For enterprises, those controls are the foundation of safe deployment.

UC platforms turn AI agent governance into a frontline issue

This is especially relevant in unified communications. Collaboration tools sit at the centre of day-to-day execution. Decisions are made in threads. Files are shared in channels. Status updates become institutional memory. Customer information and operational detail often pass through chats and meeting follow-ups.

That also makes UC platforms a governance surface. Retention policies, eDiscovery requirements, information barriers and data loss prevention controls often live here. If enterprise AI agents become first-class actors inside these systems, governance cannot be an afterthought.

A slick embedded app experience is not enough. Security teams need visibility into what the agent did. Compliance teams need auditability. IT teams need control over what actions are allowed, and under what conditions.

The missing capability enterprises will pay for: proof

Enterprises don’t just want AI agents to generate content. They want proof that actions were correct.

In practice, that means operational discipline. When an agent produces an update, teams need to know whether it used the right data, referenced the right source, and completed the workflow properly. When something goes wrong, they need to trace it. That requires logs, execution histories and audit trails showing what was accessed, what was changed, and which permissions were used.
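As an illustration of what such an audit trail might record, here is a minimal sketch of a structured event (what was read, what was changed, which identity and permission were used) kept in an append-only log that can replay one task’s execution history. The `AuditEvent` fields, identity strings like `"bot:notes-agent"` and the `AuditLog` class are hypothetical. The hash chain is one common tamper-evidence technique, swapped in here for illustration; it is not something MCP or Claude is stated to provide.

```python
import hashlib
import json
from dataclasses import asdict, dataclass
from typing import List


@dataclass(frozen=True)
class AuditEvent:
    """One agent action (hypothetical schema)."""
    task_id: str
    step: int
    acting_as: str            # identity used, e.g. "bot:notes-agent"
    action: str               # e.g. "slack.post"
    resources_read: tuple     # sources consulted for this step
    resources_changed: tuple  # objects created or modified
    permission: str           # the grant or scope that authorised the action
    outcome: str              # e.g. "ok", "error: ...", "denied: ..."


def _chain_hash(prev_hash: str, event: AuditEvent) -> str:
    """Hash the previous entry's hash together with this event's canonical JSON."""
    body = json.dumps(asdict(event), sort_keys=True)
    return hashlib.sha256((prev_hash + body).encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only log in which each entry's hash covers the one before it."""

    GENESIS = "0" * 64

    def __init__(self) -> None:
        self._entries: List[dict] = []

    def append(self, event: AuditEvent) -> str:
        prev_hash = self._entries[-1]["hash"] if self._entries else self.GENESIS
        digest = _chain_hash(prev_hash, event)
        self._entries.append({"event": event, "prev": prev_hash, "hash": digest})
        return digest

    def trace(self, task_id: str) -> List[AuditEvent]:
        """Return the execution history for one task, in step order."""
        events = [e["event"] for e in self._entries if e["event"].task_id == task_id]
        return sorted(events, key=lambda ev: ev.step)

    def verify(self) -> bool:
        """Recompute the chain; False means an entry was edited, reordered or
        removed. Truncating the tail is only caught if the latest hash is
        also stored somewhere else."""
        prev_hash = self.GENESIS
        for entry in self._entries:
            if entry["prev"] != prev_hash or entry["hash"] != _chain_hash(prev_hash, entry["event"]):
                return False
            prev_hash = entry["hash"]
        return True
```

When a workflow goes wrong, `trace()` gives the step-by-step history for that task, and `verify()` gives some assurance that the history has not been quietly edited after the fact.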

This is where many “agent” demonstrations fail once they meet real environments. A workflow breaks at step seven. An API returns an unexpected result. A permission is missing. The agent makes a confident move that is slightly wrong, and that slight wrongness gets amplified as it travels across systems.

Interactive MCP Apps can reduce errors by keeping users closer to the output, in context. But enterprise adoption depends on broader reliability and accountability. Observability and auditability are not optional extras; they are core requirements.

The bottom line

MCP is valuable infrastructure. It reduces integration friction and helps ecosystems scale. Embedded app experiences inside Claude also make AI-assisted workflows more usable and easier to review.

But enterprise AI agents will not be won on connectors alone. They will be won on identity, permissions, governance and proof. The vendors that succeed will be the ones that can make delegation safe — and demonstrate, in an audit, exactly what happened.