AI ROI has become the boardroom’s favorite two-acronym question and the enterprise’s most evasive two-acronym answer. As 2026 begins, the gap between AI ambition and operational reality appears to be widening across UC, collaboration, contact center, AV, employee experience, and work management, often for reasons that have little to do with the AI itself.

Jon Arnold, Principal Analyst at J. Arnold & Associates, offered a salient diagnosis to UC Today during the most recent Big UC Show. “AI is still more about disruption than innovation. It’s still very top-down driven.” That framing deftly contextualizes the cultural undertow beneath the hype. AI is being rolled out as a strategic mandate while employees experience it as yet another change program, one with unclear rules, unclear upside, and a very real downside when it goes wrong.

The numbers are stark. PwC’s recent 29th Global CEO Survey, which canvassed 4,454 CEOs across 95 countries and territories, reports that only 12 percent say AI has delivered both cost and revenue benefits. Meanwhile, 56 percent say they have seen no significant financial benefit so far. It is the kind of statistic that turns a tech story into a management story.

And it comes as the tech industry’s most influential executives are publicly urging companies to get on with it. At Davos, Microsoft CEO Satya Nadella warned that the AI boom “could falter without wider adoption,” arguing that “for this not to be a bubble by definition, it requires that the benefits of this are much more evenly spread.” In separate remarks on the same theme, he went further, saying that without real-world outcomes, “we will quickly lose even the social permission” to use scarce energy to generate tokens.

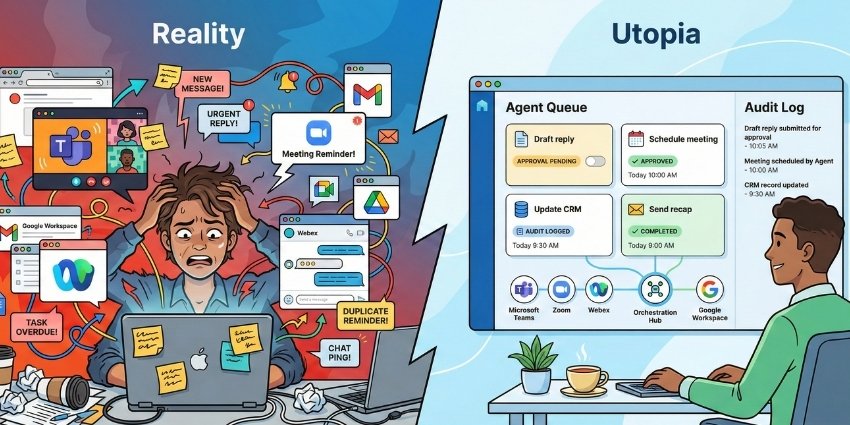

Inside companies, meanwhile, perceptions are diverging. A recent survey from Section of 5,000 white-collar workers in large firms across the US, UK, and Canada reports a “vast” gulf between what executives believe AI is saving and what employees say it’s actually doing day to day. Almost four-fifths of C-Suite respondents said AI saves them at least 4 hours of work each week, while two-thirds of workers said it saves them 2 hours or less. Many workers also reported feeling overwhelmed by how to integrate it into their jobs.

If AI value is being measured largely from the top, the data suggests the bottom may not recognize the same reality.

It’s time for an early-2026 heat check: not on AI capability, but on the conditions required for AI ROI to stop being an aspiration and start being an operating metric.

AI ROI is Widening Into a “Leaders vs Laggards” Divide

Arnold’s view is unsentimental: “Yes, there’s definitely a gap. Personally, I think it’s going to get wider.” In part, he argued, PwC’s data reflects a familiar pattern of enterprises mistaking experimentation for transformation. The “Goldilocks” outcome, he noted, remains rare: “Getting both cost reduction and revenue growth, it shows only 12 percent are getting the best of both. That’s where you want to be with AI.”

But the more revealing number, he argued, is not the 12 percent at the frontier, but the mass in the middle. “The bigger wake-up call is the 56 percent in the middle reporting no tangible benefit.” For tech and C-Suite leaders, that “middle” often looks like this: AI licenses bought as a blanket layer across the workforce, a small set of pilots blessed as innovation theater, and a creeping realization that neither has a defensible business case yet.

Arnold insisted the root cause is often the wrong value proposition:

“Enterprise AI deployment isn’t just about cost reduction. That’s the buzzsaw mentality of ‘drive out costs, lay off people.’”

If the first story employees hear about AI is workforce reduction, adoption becomes the enemy of self-preservation. Resentment and distrust are unavoidable. The organization then spends months trying to convince people to use a tool they’ve been implicitly trained to fear.

The harder pivot is toward growth and differentiation. “More strategic AI is about revenue growth. We need to shift the narrative: AI value is more than cost reduction,” Arnold outlined. That shift is especially relevant in customer-facing domains, such as contact centers, field service, sales enablement, and customer success, where the upside shows up as higher conversion, better retention, and faster throughput, not simply fewer people.

Trust and Governance: AI ROI Can’t Scale Without Legitimacy

If the first barrier is misframed value, the second is permission, whether legal, ethical, or social. Blair Pleasant, President and Principal Analyst at COMMfusion, drew attention to a detail in the CEO findings that should unsettle any CISO or risk owner signing off on AI deployments:

“Only 51 percent of the respondents said that their organization has formalized responsible AI and risk processes.”

In other words, almost half of enterprises are still improvising governance while trying to industrialize usage.

Arnold is blunt about what that means for adoption. “Trust is what will make or break AI.” In workplace systems, such as UC, collaboration, EX, and knowledge tools, AI is not acting on clean, isolated datasets. It is embedded in conversations, meetings, recordings, and documents that carry commercial confidentiality, personal data, and regulated content. A single incident can freeze a program.

This is why he puts so much weight on transparency, not as a slogan but as an operating constraint. “It’s like justice: it can’t just be done, it has to be seen to be done.” Governance only changes behavior when employees can see it, understand it, and trust it. Otherwise, it becomes corporate wallpaper while shadow AI flourishes off-policy.

Dom Black, Principal Analyst at Cavell, added a parallel insight from Cavell’s buyer research: productivity is now inseparable from constraint. “AI adoption and efficiency are closely chased behind as a priority around compliance,” he said. Enterprises are no longer choosing between speed and safety. They are being asked to deliver both simultaneously, under sharper regulatory scrutiny and louder customer demand for responsible behavior.

Culture and Training: Mandates Create “Shadow AI,” Not Outcomes

Craig Durr, Founder and Chief Analyst at The Collab Collective, took the governance conversation and pushed it into the broader human context that many transformation programs avoid. “You’re using the word trust,” Durr said. “I wonder if people are trying to fix company culture challenges that might be inhibiting productivity, and it’s all being combined into a single confusing topic.”

AI, he suggested, is arriving in organizations already strained by post-pandemic expectations, including return-to-office friction, burnout, and the subtle loss of trust that comes from constant change.

Durr’s warning is about misplaced expectations:

“The expectation of this one technology, a very powerful technology, is that it’s somehow a silver bullet for everything wrong inside a company.”

When AI is sold internally as a cure-all, it becomes a disappointment engine. Every function hopes it will solve its bottlenecks, every leader expects immediate productivity gains, and every failure reinforces skepticism.

Black suggested that skepticism often produces not abstinence but bypass. “There’s so much shadow AI usage,” he said, and enterprises end up with a paradox: high usage in pockets, low confidence at the top, and weak ROI proof everywhere. Employees who find value will keep using AI, but they may do it outside sanctioned tools if the official experience is clunky, constrained, or politically risky.

Pleasant’s view is that organizations have underinvested in the one lever that reliably changes behavior: enablement. “People aren’t getting the training they need when it comes to AI,” she said. Without training, mistakes become inevitable, especially in systems that touch sensitive data. “People need to know how to use it, how not to use it, what to use it for, and what not to use it for. That’s just not happening,” Pleasant added.

If 2026 is the year AI becomes a core workflow layer, then prompt literacy, verification habits, and safe data practice have to become baseline competencies, not optional extras for power users.

Measurement and the “Death by POC” Trap: AI ROI is Getting Lost in the Noise

AI programs don’t fail only because the technology underwhelms. They often fail because the organization can’t prove value quickly enough to sustain support, or can’t see value because it is happening in ways the metrics don’t capture. Black described the resulting cycle with candor: “We are currently in the death by POC stage of AI at the moment where everyone is trying different proofs of concepts, and obviously some of them are failing.”

What makes this stage corrosive is not failure itself (experimentation requires it) but accumulation without learning. Too many pilots are treated as isolated events rather than as instrumentation exercises designed to answer specific business questions. When POCs aren’t tied to measurable outcomes, they become expensive rehearsals.

Black’s most important point may be that many enterprises are mismeasuring the ROI they already have:

“Some of the tools that they put in place, they might not be getting the ROI from those, but actually, their employees are driving a lot of ROI personally for their jobs. It’s just not being tracked.”

That aligns uncomfortably with the executive-versus-worker perception gap in the Section survey: leadership believes time is being saved, while many workers report it isn’t, or at least not in visible, reportable ways.

In collaboration and UC, the ROI may show up as fewer meeting cycles, faster decision-making, less time spent searching for context, and cleaner handoffs between teams. In contact centers, it may show up in reduced after-call work, higher QA scores, better containment, and improved agent retention. In EX and work management, it may show up in cycle time, rework, and throughput. The common denominator is measurement discipline. If AI changes the shape of work, it must also change the way work is measured.

Black argued the path forward is cultural as much as technical. “There needs to be a more open internal culture: how do we test things, try things, and talk to our employees, rather than mandating, ‘this is the tool we use,’” he said. Without that openness, organizations end up with the worst of both worlds: a top-down rollout that dampens initiative and a bottom-up reality that remains invisible to governance and ROI reporting.